Initial RAID 0+1 Setup Question

I have 8 36GB drives which, during the OS install, I partition as follows:

All the other slices I leave blank, so I only have a / (root) partition on slice 0. I am trying to set up RAID 0+1 on c0t0d0s0 to go across all 8 drives on slice 0.

But when I create the RAID on /dev/md/rdsk/d100, I can't mount it as / (root) because it's being used/mounted by c0t0d0s0.

By the way, I am running Solaris 2.6 on an E450 server and am running Solstice DiskSuite to manage the RAID.

When I comment out the line with / (root) mounted on /dev/rdsk/c0t0d0s0 and try to mount / (root) on /dev/md/rdsk/d100, the server does not boot, and it doesn't let me vi /etc/vfstab to undo the change because it says that it's a read-only file, so I have to start all over. So how do I set up the RAID to mount / (root) as 1 RAIDed drive (/dev/md/rdsk/d100)? Oh yeah, and I am creating my metadbs on s7, which is partitioned for 25MB on all 8 drives.

I am a bit confused about what you are asking here.

Is this possible? Has anyone done this before without reloading the OS?

Checkers, using Disk Suite it is very easy: edit your /etc/vfstab and replace the md entries with the ctdt entries.

    /dev/md/dsk/d4 /dev/md/rdsk/d4 /usr ufs 1 no -
    /dev/md/dsk/d3 /dev/md/rdsk/d3 /var ufs 1 no -
    /dev/md/dsk/d5 /dev/md/rdsk/d5 /opt ufs 2 yes -

Reboot and metaclear the old metadevices ( # metaclear d4 ). Use format to make sure you have the correct ctdt #s.

The root partition requires an additional step: you need to comment out this section in /etc/system. The other forceload of md stuff can be left in.

A bonus feature of the F-280 is that it has FC disks, so the boot alias is not for a SCSI ID (determined by slot in an E class or V class box) but the WWNN of the drive. If you need to change your boot disk, you will also need to change the boot alias in the OpenBoot PROM.

We did this to our /opt partitions back when the F-280s were new; now, 7 years later, I'm going back to fully mirrored as the drives are getting old.

I'd just detach the submirrors and reuse them. You can leave the mirror devices mounted. They'll continue to be "one-way" mirror devices. Besides not needing a reboot, it lets you mirror in the future easily by reattaching a disk.

Darren, I avoid leaving metadevices of one disk for the sole reason that I forget these are not mirrored, and don't remember until years later, when a single drive takes out what I thought was a mirrored pair.
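As a rough illustration of the vfstab edit described above (replacing the md entries with the ctdt entries), here is a minimal Python sketch. The function name `replace_md_entries` and the slice names in `MD_TO_SLICE` are hypothetical; the real slices behind each metadevice have to be confirmed with format and metastat first. It only rewrites text and does not touch /etc/vfstab itself.

```python
def replace_md_entries(vfstab_text, md_to_slice):
    """Return vfstab text with mapped metadevice entries pointed at plain slices."""
    rewritten = []
    for line in vfstab_text.splitlines():
        fields = line.split()
        is_md_entry = (
            len(fields) >= 2
            and not line.lstrip().startswith("#")
            and fields[0].startswith("/dev/md/dsk/")
        )
        if is_md_entry:
            metadevice = fields[0].rsplit("/", 1)[1]  # e.g. "d4"
            if metadevice in md_to_slice:
                ctds = md_to_slice[metadevice]
                fields[0] = "/dev/dsk/" + ctds    # block device to mount
                fields[1] = "/dev/rdsk/" + ctds   # raw device to fsck
                line = "\t".join(fields)
        rewritten.append(line)
    return "\n".join(rewritten) + "\n"


# Hypothetical mapping (an assumption for this example only).
MD_TO_SLICE = {"d4": "c1t0d0s4", "d3": "c1t0d0s3", "d5": "c1t0d0s5"}

SAMPLE = (
    "#device\tdevice\tmount\tFS\tfsck\tmount\tmount\n"
    "/dev/md/dsk/d4\t/dev/md/rdsk/d4\t/usr\tufs\t1\tno\t-\n"
    "/dev/md/dsk/d5\t/dev/md/rdsk/d5\t/opt\tufs\t2\tyes\t-\n"
)

print(replace_md_entries(SAMPLE, MD_TO_SLICE), end="")
```

Entries whose metadevice is not in the mapping are left untouched, so a missing mapping shows up as a leftover /dev/md line rather than a guessed device name.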

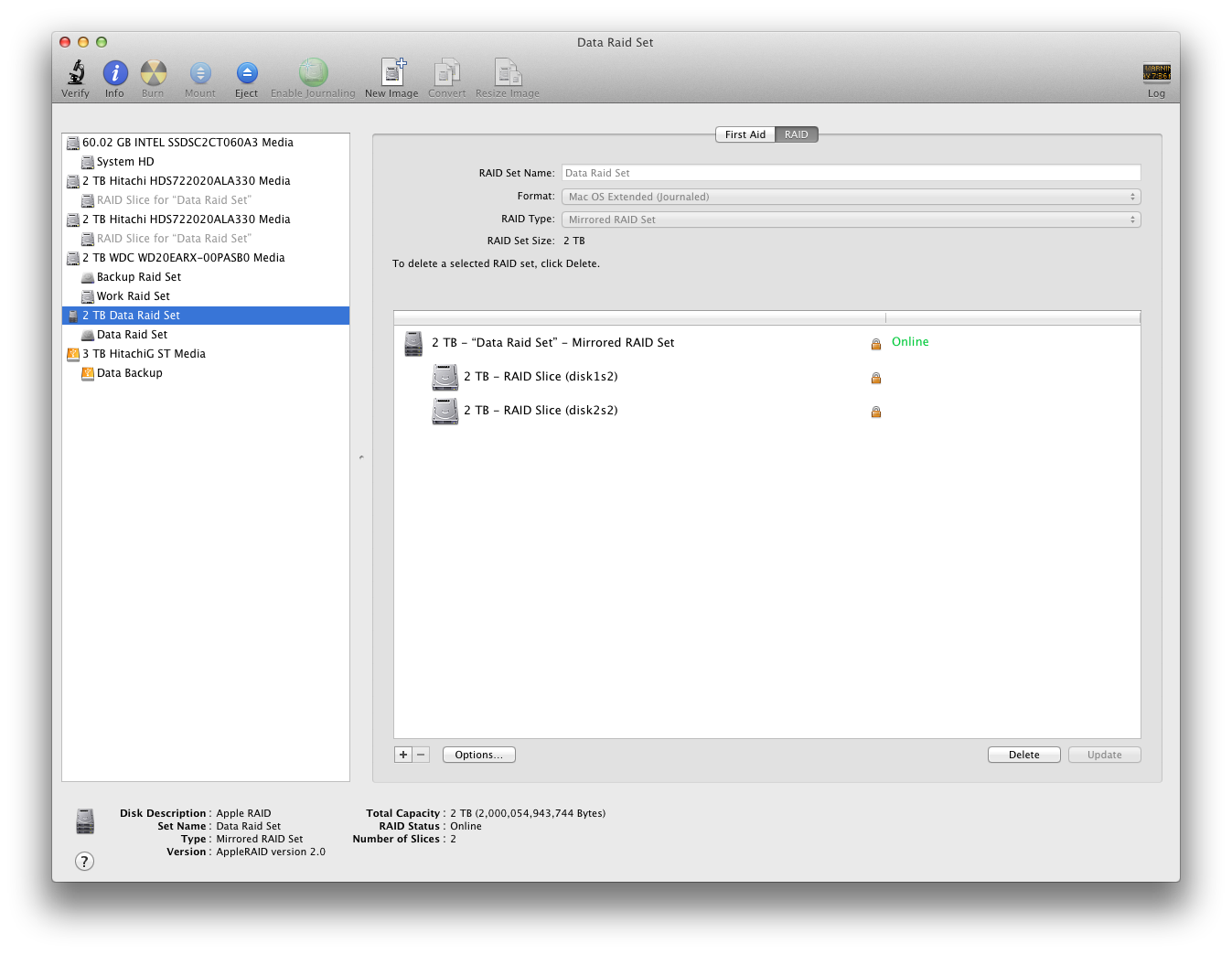

I have an SF 280R that was previously configured as RAID 1 with 2x36GB drives. OS: Solaris 10. Disk space is running low, so I'd like to break the mirror and use the 2nd HD for storage.

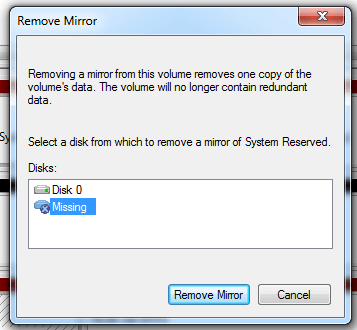

How to break mirror disks? - Solaris 10

I *think* this was intended to be used in a situation where more than two mirrors were in use, because you would still want an active pair for mirror protection. In some situations the drive might be moved off-site for disaster recovery. The subtle implication is that the drive being removed contains at most one partition, which was used in that mirror. At one time SoftRAID actually supported multiple partitions on a drive, each participating in a different RAID (messy).


Taking a disk out of a mirror, changing it and putting it back is a recipe for lost data. It might work sometimes, or even most of the time, but it's definitely walking a pretty fine line between success and failure; failure being that the wrong disk is marked as degraded and your changes are lost.

The intention of that feature was to physically pull a (presumably hot-swappable) drive from an enclosure, take it somewhere else for backup (or whatever), then reintroduce it into the RAID (with the full understanding that the returning drive would require a re-sync to the active array).
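To make the risk concrete, here is a toy Python model of a split and re-sync. It is not how SoftRAID works internally; the `Disk` class and `resync` function are hypothetical names used only to show that a rebuild copies one side over the other, so any edit made on the side chosen as the target is discarded.

```python
from dataclasses import dataclass, field


@dataclass
class Disk:
    name: str
    blocks: dict = field(default_factory=dict)


def resync(source, target):
    """Overwrite the target with the source's contents, like a full mirror rebuild."""
    target.blocks = dict(source.blocks)


# A two-way mirror: both halves start out identical.
active = Disk("active", {0: "v1"})
split_off = Disk("split-off", {0: "v1"})

# The split-off disk is edited elsewhere while the active disk keeps taking writes.
split_off.blocks[0] = "edited offline"
active.blocks[0] = "v2"

# Reintroducing the disk: the active side is treated as authoritative.
resync(source=active, target=split_off)
print(split_off.blocks[0])  # the offline edit has been replaced by the active copy
```

Swapping the `source` and `target` arguments models the failure case from the post: the active disk is the one marked as degraded, and the writes it took in the meantime are lost instead.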

This was a documented feature of SoftRAID:

"Split Mirror - We have added important functionality to this feature. SoftRAID 3 allows multiple drive mirrors, and the ability to split one or more disks at a time from the volume. One of the added features is the ability to split off a Read-only secondary volume. This is a write-protected volume, which can be moved to another system for backup. It can be added back to its original volume at any time"
